When it comes to nuclear, energy, and environmental work, there’s no room for guesswork.

In today’s fast-paced professional world, where timelines are short and the information we rely on must be accurate, many teams are turning to artificial intelligence to support research and reporting. But in industries where compliance, safety, and regulatory integrity are non-negotiable, the source of that information matters as much as the speed of the answer, if not more.

That’s where AtomAssist comes in.

Designed for engineers, field professionals, analysts, and managers in highly regulated fields like nuclear and utilities, AtomAssist was created to solve a specific problem: helping users access, understand, and trust their own documents and data—faster and more reliably than ever before.

A First-Hand Use Case from Deep Fission

During a recent session, Ingrid Nordby of Deep Fission walked through how she used AtomAssist to navigate a complex research task focused on groundwater contamination and borehole data, both critical inputs to environmental and nuclear facility assessments.

“I was particularly interested in groundwater contamination test results,” Ingrid shared. “I had a collection of scientific articles, reports, and field data, and I uploaded everything into AtomAssist to see how it could help.”

Once the materials were in the system, Ingrid asked AtomAssist to generate summaries, extract specific insights, and even build a clear technical narrative. The results were impressive.

“It returned exactly what I uploaded—only now it was organized and explained in a way I could use in a report,” she said. “It saved me hours of work.”

Built for Validation

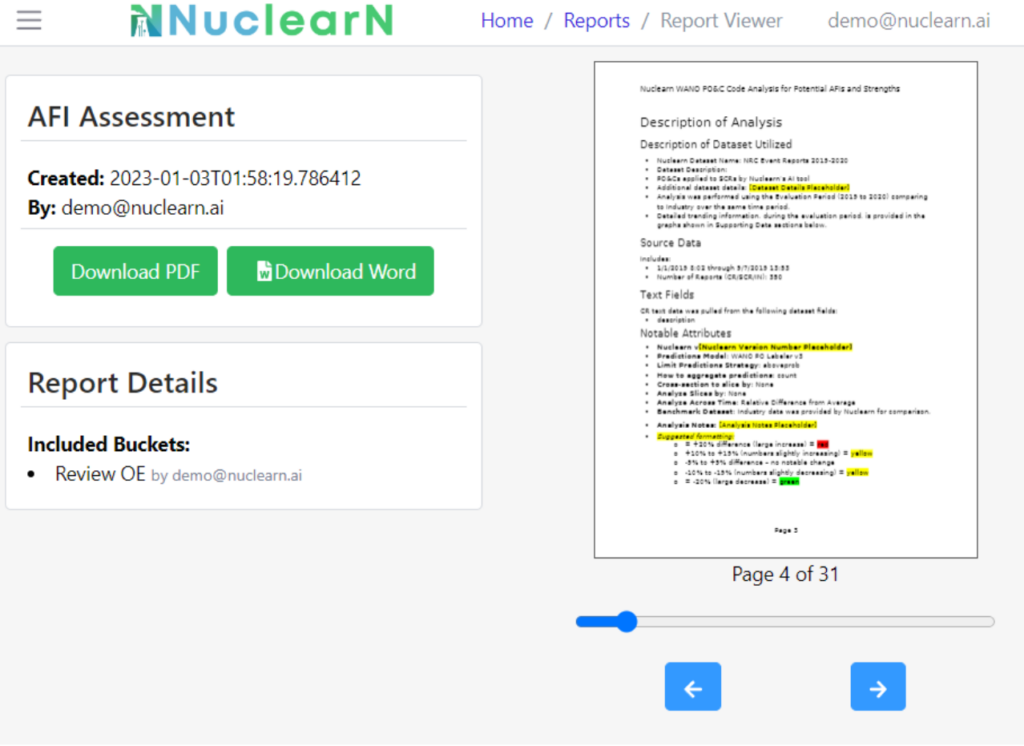

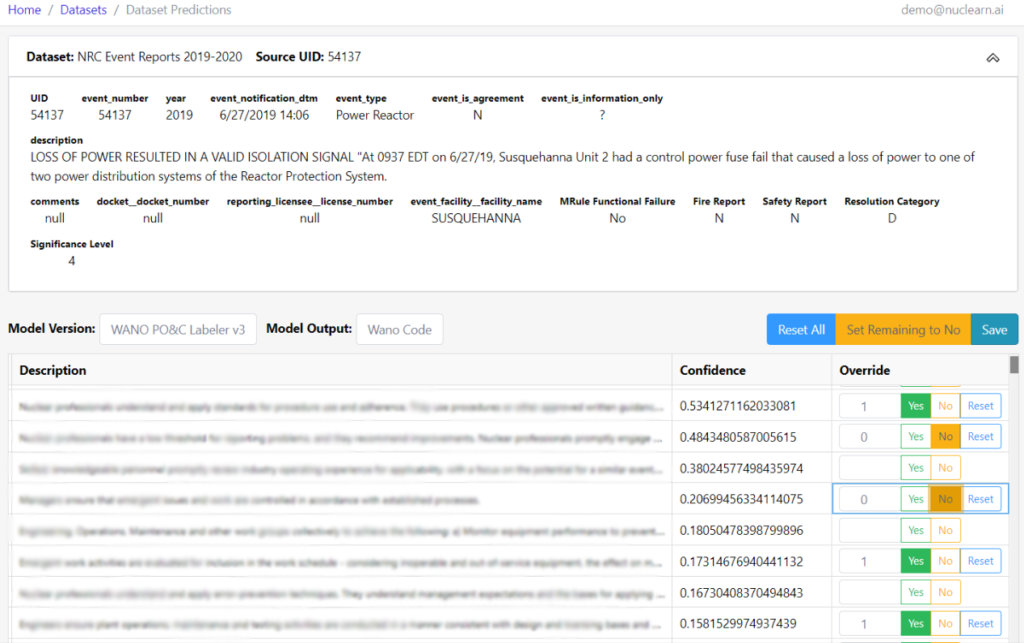

What sets AtomAssist apart is its commitment to validation. In high-risk sectors, an answer is only as good as its proof, and AtomAssist makes every output traceable to the original, verified source documents.

Ingrid explained how easy it was to confirm where the information was coming from:

“I clicked on the ‘Sources’ tab, and it gave me all the validation information I needed. I knew the data it was referencing was the exact documentation I had uploaded.”

This level of traceability gives teams peace of mind. When regulators or internal stakeholders ask “Where did this come from?” the answer is a click away.

From Raw Data to Ready-to-Use Narratives

AtomAssist doesn’t just analyze documents—it helps translate them into usable content. Ingrid was able to pull results from multiple uploaded files and ask AtomAssist to build a narrative that aligned with her technical goals.

“I wasn’t just looking for information,” she said. “I wanted information I could use right away—and that’s what AtomAssist gave me.”

The narrative tools also support follow-up questions, refinements, and targeted insights. If you need one version for a technical appendix, another for a stakeholder update, and a third for a management summary, the system can help build each from the same core data.

Creating Reusable Knowledge Sets

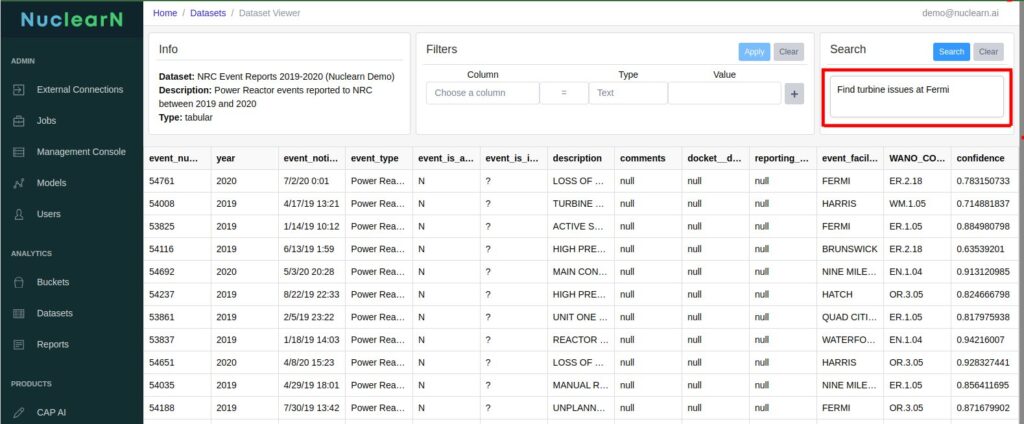

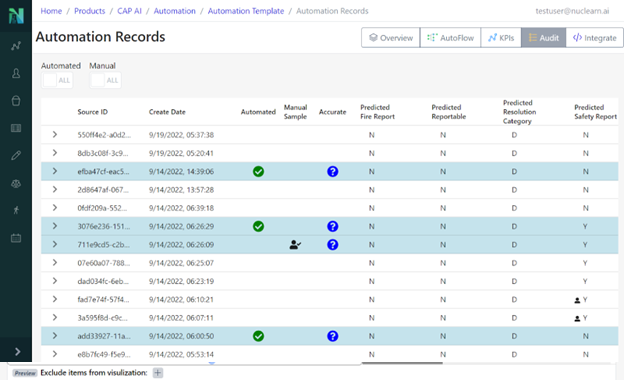

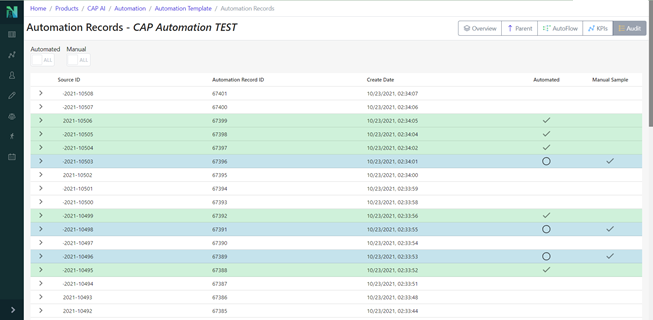

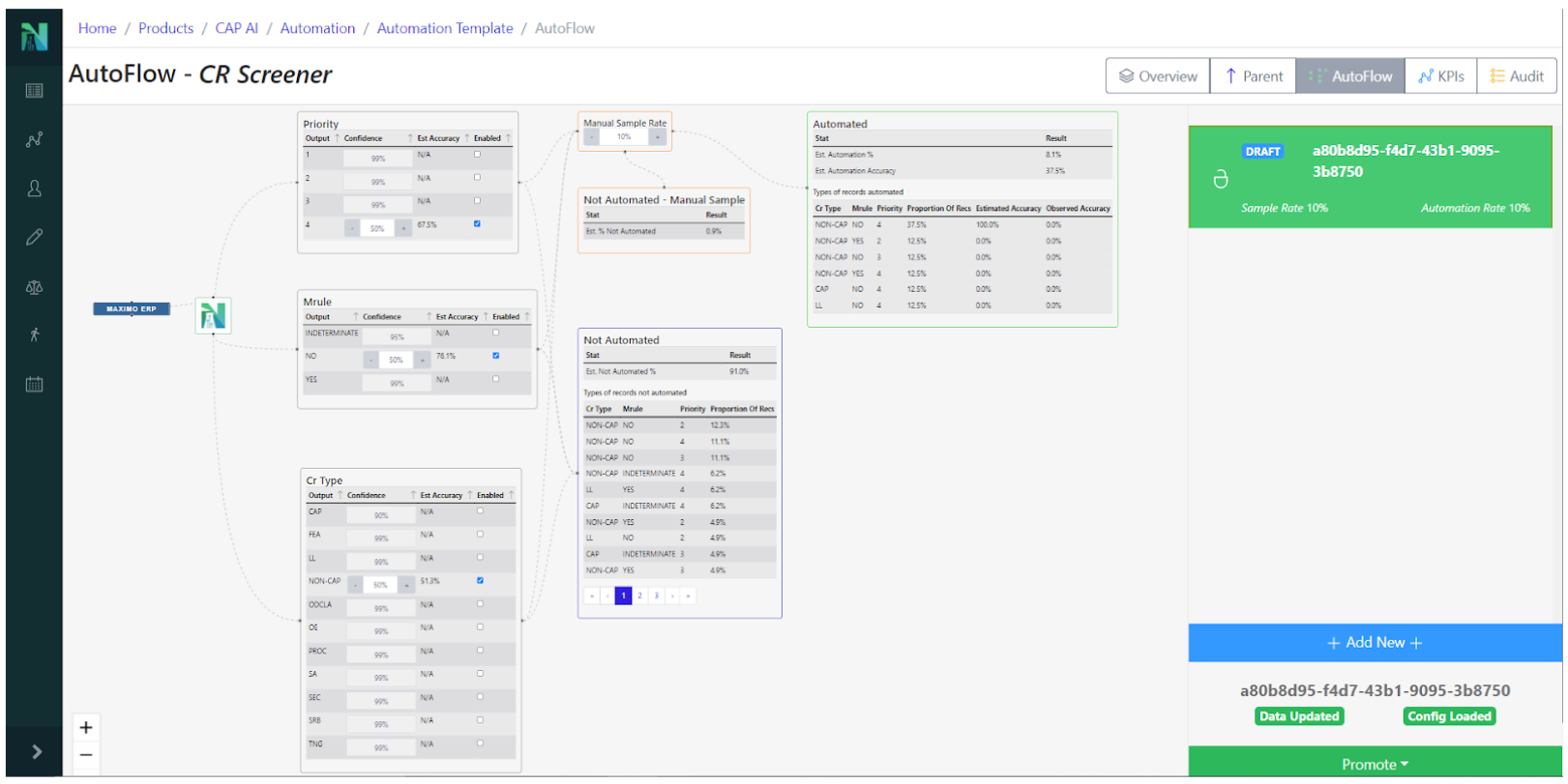

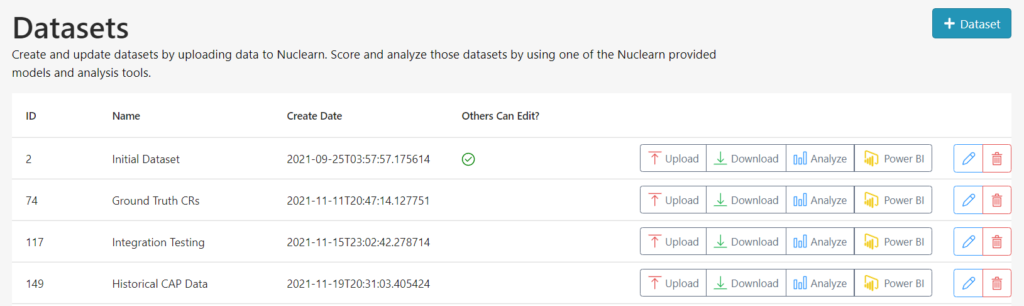

In regulated industries, the same data often needs to be used across teams and departments. One of the most powerful features Ingrid used was the ability to write extracted insights into new datasets within the AtomAssist platform.

With help from the Nuclearn team, she learned how to consolidate all validated source references into a structured dataset that she could return to again and again.

“Now I’m thinking about how to create a single-source document that my whole team can use,” Ingrid said. “Once the content is verified and structured, AtomAssist makes it easy to pull from that data in the future.”

This capability supports knowledge retention, reduces rework, and keeps everyone aligned on the same version of the truth—without the chaos of emails, folders, or uncontrolled edits.

Precision Is a Requirement, Not a Bonus

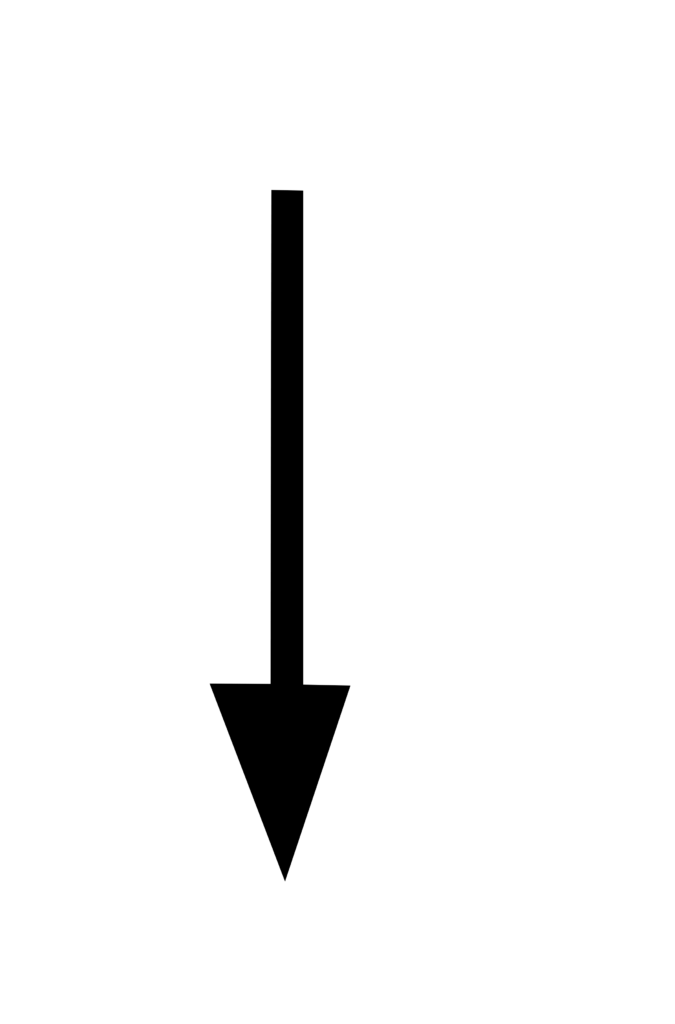

For professionals in nuclear, utilities, safety, and compliance, documentation isn’t a suggestion—it’s a system of record. Misinformation, outdated reports, or vague sourcing can have consequences ranging from delayed operations to regulatory penalties.

That’s why AtomAssist was built with precision and trust at its core. Every analysis, summary, or insight provided by the platform is grounded in what’s already approved by your organization.

It’s not searching a public database. It’s not scanning the internet. It’s referencing only the material you’ve given it—the material that meets your compliance requirements, your safety standards, and your internal review processes.

This difference is what makes AtomAssist not just useful but essential in high-stakes environments.

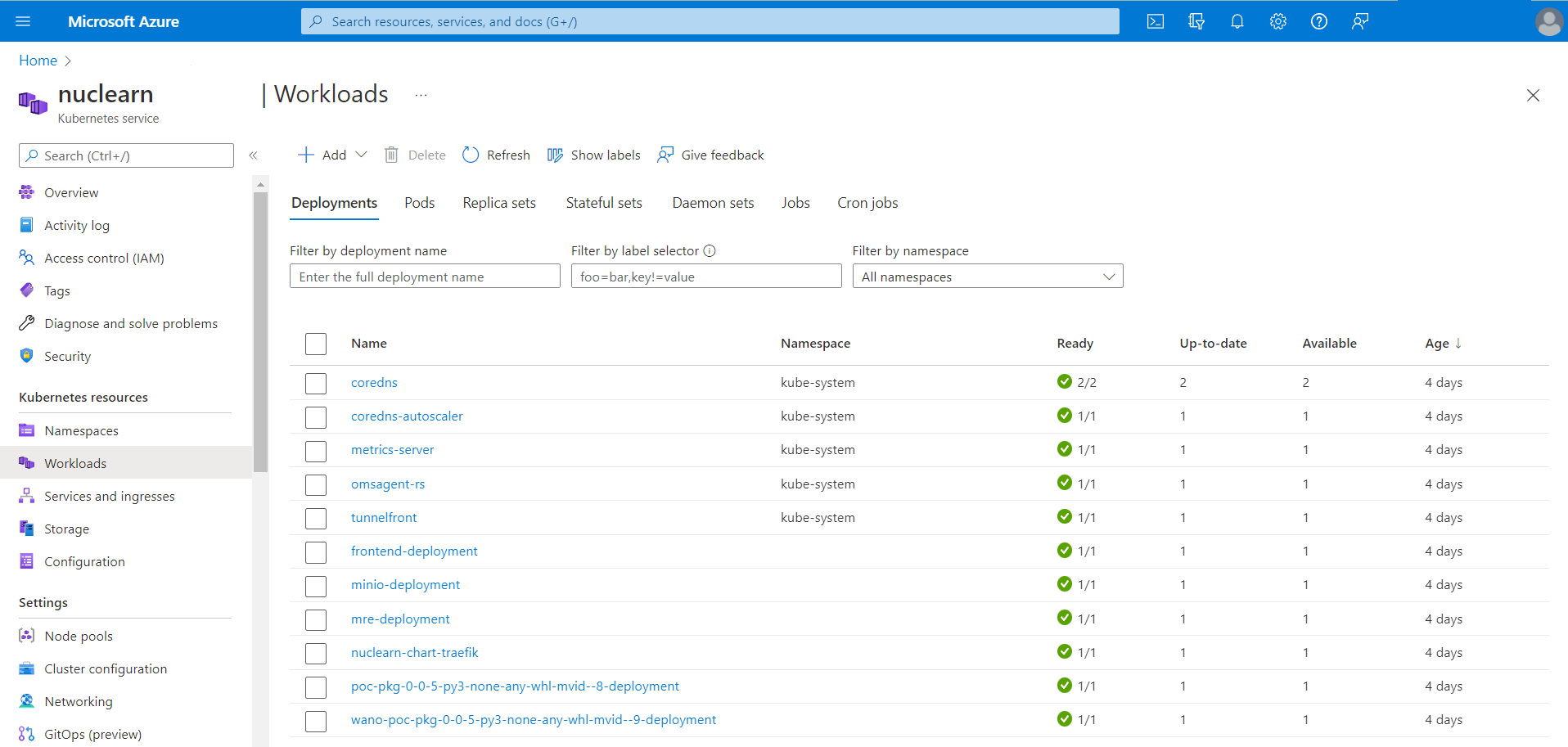

Security and Compliance by Design

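AtomAssist is built for deployment in secure environments. It meets the demands of on-premises deployment, data confidentiality, and Part 810 compliance (10 CFR Part 810, the U.S. Department of Energy’s rules governing assistance to foreign atomic energy activities).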

Whether you’re a nuclear site manager, a corrective action program lead, or an engineer managing records for regulatory filings, AtomAssist respects the boundaries and expectations of your industry.

And it doesn’t require users to learn a new interface or scripting language. It works where you work—using your documents, your taxonomy, and your subject matter.

Reducing Risk and Enhancing Productivity

Ingrid’s experience underscores what so many professionals in complex industries already know: you don’t have time to double-check everything manually, but you can’t afford to get it wrong.

AtomAssist eliminates the guesswork. It enables you to pull trusted data from your own source library, validate it instantly, and build what you need with confidence.

From policies and procedures to test reports and technical briefs, AtomAssist can support:

- Engineering and Maintenance Documentation

- Licensing and Environmental Reports

- Root Cause and Corrective Action Narratives

- Outage Preparation Materials

- Executive Summaries and Stakeholder Briefings

All while ensuring your work is based on real, validated information—not approximations.

Looking Forward: A Smarter Way to Work

What Ingrid found in AtomAssist wasn’t just an AI system. It was a work partner, one that respects the technical rigor of her field, the pressure of her deadlines, and the importance of backing up every claim.

As she put it:

“AtomAssist helped me get to what I needed faster. But more importantly, it helped me trust the process. Everything I used had validation behind it.”

For teams working in regulated industries, that level of trust is priceless.

The Bottom Line

In critical sectors, research isn’t just about finding information—it’s about defending it. Every decision, every report, and every stakeholder update must stand up to scrutiny.

That’s what AtomAssist is built for. It empowers professionals to do their best work, backed by the sources they already trust. It’s secure, compliant, and ready to be deployed in the toughest documentation environments.

So, the next time you’re preparing a report, chasing down test results, or building a summary for executive review, remember:

With AtomAssist, you’re not just answering questions. You’re building with certainty.